整体流程:

# 文件夹biaoding处理加crop以及生成.yml系列文件,保存在calib_params以及biaoding_pipeline文件夹中

0_test_calibprocess.sh

# 内参标定,往往需要多天数据,且要保证标定板出现的多样性以及cover大部分区域

1_calib_intrics.sh

# 外参标定,使用混合的内参对单天数据进行外参标定,最好loss在0.000x

1_calib_extrics.sh

# 修改anchor.yaml相机信息进行15标定,loss 100以下,A88参考为50左右

2_test_merge.sh

# 选择数据送标anchor,返回后, loss 0.00x, 不准基本就是anchor标错或者方向盘等位置发生运动

python tools/display_tags.py --anchor_path /mnt/.../anchor

3_test_anchors.sh

# 检查anchor的3d位置是否正确

# 首先根据点位加入颜色

python 3_addcolor_anchor.py

# 生成A柱相机坐标轴可视化文件

python tools/convert_camparam_to_txt.py /mnt/lustressd/share/taoye/data/A79/collections/calib_params/20221008/multiCamParams20221008.yml_with56.yaml

# 本机可视化

sudo cloudcompare.CloudCompare

# 3d lmks 计算,不用等anchor标定

5_test_collections.sh

# camera1 lmks计算加剪裁,在5步骤之后,分两步,先lmks计算后crop

6_newlmks_crop.sh

# script文件夹下进行睁闭眼判定

ftp://10.5.36.170/lustressd/share/taoye/projs/scripts/test_open_eye.sh

# camera1 计算head3d 先填写FaceFusion/models/camera_A88.json相机内外参

7_get_head3d.sh

# 按步骤生成json文件,要进行相应的配置:

gen_json/0_gen_list_ldmks3d.py

gen_json/1_test_iris3d.py

# 测试组测试集video2img:

10_video2img.py

# 处理测试返回xlsx表格

10_process_xlsx.py

# 上传量化

mec-cloud file upload -p <local_path> -r <remote path>

0. 数据预处理

这个步骤主要是crop四路数据,及生成后续步骤所需要的yaml文件。

1. 四路相机与双路相机标定

内参标定

import cv2

import numpy as np

import yaml

class CameraCalibrationIntrics:

def __init__(self, config):

# 初始化参数

self.configs_chessboard = None

self.load_config(config)

self.board_height = 0

self.board_width = 0

self.board_square_size = 0

self.board_size = None

self.board_3d = None

self.board3d = []

self.cameras_num = 0

self.intrs = []

self.extrs = []

self.dists = []

self.frames_num = 0

self.valid = []

self.loss = []

self.imgSize = []

self.obj_pts = []

def load_config(self, config_path):

def load_yaml(file_path):

with open(file_path, 'r') as yaml_file:

try:

data = yaml.safe_load(yaml_file)

return data

except yaml.YAMLError as e:

print(f"Error loading YAML file: {e}")

return None

yaml_data = load_yaml(config_path)

if yaml_data:

print("YAML data loaded successfully:")

self.configs_chessboard = yaml_data

else:

print("Failed to load YAML data.")

def init_params(self):

# 初始化参数

print("InitParams ")

self.board_height = self.configs_chessboard["board_height"]

self.board_width = self.configs_chessboard["board_width"]

self.board_square_size = self.configs_chessboard["board_square_size"]

self.board_size = [self.board_width, self.board_height]

self.board_3d = np.zeros((3, self.board_height * self.board_width))

for i in range(self.board_height):

for j in range(self.board_width):

self.board_3d[:, j + i * self.board_width] = np.array([j, i, 0]) * self.board_square_size

for r in range(self.configs_chessboard["board_height"]):

for c in range(self.configs_chessboard["board_width"]):

p = np.array([c * self.configs_chessboard["board_square_size"], r * self.configs_chessboard["board_square_size"], 0.0])

self.board3d.append(p.tolist())

self.cameras_num = self.configs_chessboard["cameras_num"]

self.intrs = [np.eye(3, 3) for _ in range(self.cameras_num)]

self.extrs = [np.eye(4, 4) for _ in range(self.cameras_num)]

self.dists = [[0] * 8 for _ in range(self.cameras_num)]

self.frames_num = self.configs_chessboard["frames_num"]

self.valid = [True] * self.frames_num

self.obj_pts = list(self.board_3d.flatten())

return "SUCCESS"

def run(self):

print("Run ")

if self.calib_intr_for_every_camera() != "SUCCESS":

return "COMMONFAILED"

self.save_params()

def calib_intr_for_every_camera(self):

print("开始进行每个相机的内参标定 ")

dir_paths = [self.configs_chessboard["dataPath"], self.configs_chessboard["imgFileNameFormat"]]

for i in range(self.configs_chessboard["cameras_num"]):

self.intrs[i] = np.eye(3, 3)

self.extrs[i] = np.eye(4, 4)

obj_pts = []

img_pts = []

ptsptr = [self.board_3d.ctypes.data]

img_pts_ = np.zeros(self.frames_num * self.board_3d.shape[1] * 2)

self.imgSize.append([0, 0])

camera_nums = self.configs_chessboard["camera_nums"][i]

start = 0

end = 0

if self.configs_chessboard["intric_calib"] == "fourCams":

for _ in range(i):

start += self.configs_chessboard["camera_nums"][_]

end = start + self.configs_chessboard["camera_nums"][i]

elif self.configs_chessboard["intric_calib"] == "stereoCams":

end = start + self.configs_chessboard["camera_nums"][i]

else:

return "COMMONFAILED"

print(f"相机:{i + 1} 起始:{start} 结束:{end}")

for j in range(start, end):

img = cv2.imread(dir_paths[0] + dir_paths[1].format(i + self.configs_chessboard["cameraIndexOffset"], j), cv2.IMREAD_COLOR)

if img is None:

continue

if self.imgSize[0][0] * self.imgSize[0][1] <= 0:

self.imgSize[0] = img.shape[:2][::-1]

elif self.imgSize[0] != img.shape[:2][::-1]:

img = cv2.resize(img, self.imgSize[0], interpolation=cv2.INTER_LINEAR)

pts = cv2.findChessboardCorners(img, (self.configs_chessboard["board_width"], self.configs_chessboard["board_height"]))[1]

if pts is None or len(pts) < self.configs_chessboard["board_width"] * self.configs_chessboard["board_height"]:

continue

pts = pts.reshape(len(pts), 2)

test_img = img.copy()

cv2.drawChessboardCorners(test_img, (self.configs_chessboard["board_width"], self.configs_chessboard["board_height"]), pts, True)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

cv2.cornerSubPix(gray, pts, (12, 12), (-1, -1), (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_COUNT, 30, 0.1))

flag, errors_corners = self.is_corners_valid(pts, (self.configs_chessboard["board_width"], self.configs_chessboard["board_height"]))

if not flag:

self.draw_pts(img, pts, self.board_size, errors_corners)

cv2.imwrite(dir_paths[0] + dir_paths[1].format(i + self.configs_chessboard["cameraIndexOffset"], j) + "_corner_subpixel_error.png", img)

continue

self.draw_pts(img, pts, (self.configs_chessboard["board_width"], self.configs_chessboard["board_height"]), errors_corners)

cv2.imwrite(dir_paths[0] + dir_paths[1].format(i + self.configs_chessboard["cameraIndexOffset"], j) + "_corner_subpixel.png", img)

img_pts.append(pts)

img_pts_[2 * (self.board_3d.shape[1] * len(obj_pts) + np.arange(pts.shape[0]))] = pts[:, 0]

img_pts_[2 * (self.board_3d.shape[1] * len(obj_pts) + np.arange(pts.shape[0])) + 1] = pts[:, 1]

ptsptr.append(img_pts_.ctypes.data + 2 * self.board_3d.shape[1] * len(obj_pts))

obj_pts.append(self.board_3d.T.astype(np.float32))

det, intr, dist, _, _ = cv2.calibrateCamera(obj_pts, img_pts, self.imgSize[0], None, None)

self.loss.append(det)

self.intrs[i] = intr

self.dists[i] = dist.tolist()

return "SUCCESS"

def draw_pts(self, img, pts, board_wh, errors):

line_max = 7

line_colors = [

(0, 0, 255),

(0, 128, 255),

(0, 200, 200),

(0, 255, 0),

(200, 200, 0),

(255, 0, 0),

(255, 0, 255)

]

prev_pt = (0, 0)

r = 4

line_type = 8

for y in range(board_wh[1]):

color = line_colors[y % line_max]

for x in range(board_wh[0]):

pt = (int(pts[y * board_wh[0] + x][0]), int(pts[y * board_wh[0] + x][1]))

if x != 0:

cv2.line(img, prev_pt, pt, color, 1, line_type)

cv2.line(img, (pt[0] - r, pt[1] - r), (pt[0] + r, pt[1] + r), color, 1, line_type)

cv2.line(img, (pt[0] - r, pt[1] + r), (pt[0] + r, pt[1] + r), color, 1, line_type)

cv2.circle(img, pt, r + 1, color, 1, line_type)

prev_pt = pt

# draw pts

if 0 < x < board_wh[0] - 1:

cv2.putText(img, str(int(errors[y * (board_wh[0] - 2) + x - 1])), pt, cv2.FONT_HERSHEY_SIMPLEX, 0.3,

color, 1, line_type)

def is_corners_valid(self, pts, board_wh, ):

flag = True

errors = np.zeros(board_wh[1] * (board_wh[0] - 2))

for h in range(board_wh[1]):

for w in range(1, board_wh[0] - 1):

p1 = pts[h * board_wh[0] + w - 1] - pts[h * board_wh[0] + w]

p2 = pts[h * board_wh[0] + w + 1] - pts[h * board_wh[0] + w]

dot = np.dot(p1, p2.T)

length_1 = np.linalg.norm(p1)

length_2 = np.linalg.norm(p2)

if dot / (length_1 * length_2) <= -1:

error = 180

else:

error = np.arccos(dot / (length_1 * length_2)) * 180 / np.pi

errors[h * (w - 2) + w - 1] = error

if error < 170:

flag = False

return flag, errors

def save_params(self):

# print("Writing to file:", self.configs_chessboard["cameraPoseFile"])

# self.save_camera_yml(self.configs_chessboard["cameraPoseFile"], self.intrs, self.extrs, self.dists, self.imgSize)

for i in range(self.cameras_num):

print("camera_{}:".format(i))

print("reprojection err::{}\n camera_intrs:{}\n camera_extrs: {}\n camera_dists: {}".format(self.loss[i], self.intrs[i], self.extrs[i], self.dists[i]))

if __name__ == '__main__':

config_path = '/Users/lirunze/Documents/work/documents/data/数据处理/BYD-H/20230318/calib_param/calib_irCams_20230318.yml'

cci = CameraCalibrationIntrics(config_path)

cci.init_params()

cci.run()

cci.save_params()

这里主要的函数就是:

pts = cv2.findChessboardCorners(img, (board_width, board_height))[1]cv2.cornerSubPix(gray, pts, (12, 12), (-1, -1), (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_COUNT, 30, 0.1))det, intr, dist, _, _ = cv2.calibrateCamera(obj_pts, img_pts, self.imgSize, None, None)

最终函数的输出:

YAML data loaded successfully:

InitParams

Run

开始进行每个相机的内参标定

相机:1 起始:0 结束:25

相机:2 起始:25 结束:55

相机:3 起始:55 结束:86

相机:4 起始:86 结束:114

camera_0:

reprojection err:0.16779124924543037

camera_intrs:

[[1.50814411e+03 0.00000000e+00 6.14982150e+02]

[0.00000000e+00 1.50876797e+03 4.06210278e+02]

[0.00000000e+00 0.00000000e+00 1.00000000e+00]]

camera_extrs:

[[1. 0. 0. 0.]

[0. 1. 0. 0.]

[0. 0. 1. 0.]

[0. 0. 0. 1.]]

camera_dists: [[-0.4501263516756452, 0.2663145001806112, -0.00011518043908016835, -0.0013994951148625545, -0.3717059431585325]]

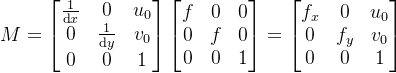

内参矩阵的具体表达式如下:

其中,$dx$和$dy$ 分别是每个像素在图像平面和方向上的物理尺寸,$(u_0,v_0)$是图像坐标系原点在像素坐标系中的坐标,$f$ 为摄像头的焦距,$f_x$,$f_y$为焦距与像素物理尺寸的比值,单位为个(像素数目)。

据此可以得到,这台摄像头的$f_x$和$f_y$大概为1508,说明焦距约等于1508个像素的物理尺寸。$u_0\approx 615$,$v_0\approx406$。这台摄像头的像素为1280×800,因此$u_0$的理论值应为640,$v_0$的理论值应为400。误差主要是目标坐标系的测量时带来的误差。

外参标定

参考下面相机标定总结中的外参标定方式

这里再次强调一下,对于同一个相机,相机的内参矩阵取决于相机的内部参数,无论标定板和相机的位置关系是怎么样的,相机的内参矩阵不变。这也正是在“求解内参矩阵”中,

2. 15相机标定

首先针对1号相机 也就是主相机用solvepnp输入3d点、2d点、相机内参、相机畸变,输出r、t

用projectPoints,输入3d点、相机内参、相机畸变、r、t,输出重投影2d点

计算原2d点和重投影2d点的距离作为重投影误差

// left 为12号相机

img_path_left = p["path_12"] + "/" + cv::format(p["fmt_12"].c_str(), camid_left, frame_id);

cv::Mat img_left = cv::imread(img_path_left);

std::vector<cv::Point2f> pts_left;

bool found = cv::findChessboardCorners(img_left,

cv::Size(n["board_width"],n["board_height"]), pts_left);

if(!found) continue;

//得到12号相机棋盘格二维corner点坐标

cv::Mat gray;

cv::cvtColor(img_left, gray, cv::COLOR_BGR2GRAY);

cornerSubPix(gray, pts_left, cv::Size(5, 5), cv::Size(-1, -1), cv::TermCriteria(cv::TermCriteria::EPS + cv::TermCriteria::COUNT, 30, 0.1));

cv::drawChessboardCorners(img_left, cv::Size(n["board_width"],n["board_height"]), pts_left, found);

// right为56号相机

img_path_right = p["path_56"] + "/" + cv::format(p["fmt_56"].c_str(), camid_right, frame_id);

cv::Mat img_right = cv::imread(img_path_right);

std::vector<cv::Point2f> pts_right;

found = cv::findChessboardCorners(img_right,

cv::Size(n["board_width"],n["board_height"]), pts_right);

if(!found) continue;

//得到56号相机棋盘格二维corner点坐标

cv::cvtColor(img_right, gray, cv::COLOR_BGR2GRAY);

cornerSubPix(gray, pts_right, cv::Size(5, 5), cv::Size(-1, -1), cv::TermCriteria(cv::TermCriteria::EPS + cv::TermCriteria::COUNT, 30, 0.1));

cv::drawChessboardCorners(img_right, cv::Size(n["board_width"],n["board_height"]), pts_right, found);

//solvepnp得到12相机姿态revc, tvec

cv::Mat revc, tvec;

cv::solvePnP(board3d, pts_left, campara_1234.camparam_.Ks[camid_left], campara_1234.camparam_.Ds[camid_left], revc, tvec);

//转换为外参矩阵

cv::Mat extr;

SE3FromAngleAxisdAndTranslation(revc, tvec, extr);

cv::Mat R, T;

R = extr.rowRange(0, 3).colRange(0, 3).clone();

T = extr.rowRange(0, 3).col(3).clone();

//将3d棋盘格坐标转换为相机坐标系中

std::vector<cv::Point3f> pts_chessboard_clouds;

for (int sub_obj = 0; sub_obj < board3d.size(); sub_obj++) {

cv::Point3f sub_obj_point = board3d[sub_obj];

cv::Mat tmp = (cv::Mat_<double>(3, 1) << sub_obj_point.x, sub_obj_point.y, sub_obj_point.z);

cv::Mat pt = R*tmp + T;

pts_chessboard_clouds.push_back(cv::Point3f(pt.at<double>(0, 0), pt.at<double>(1, 0),pt.at<double>(2, 0)));

}

//计算重投影误差

std::vector<cv::Point2f> pts_reproj_left;

cv::projectPoints(board3d,

revc,

tvec,

campara_1234.camparam_.Ks[camid_left],

campara_1234.camparam_.Ds[camid_left],

pts_reproj_left);

for(int _ = 0; _ < pts_reproj_left.size(); _++) {

cv::Point2f pt_repro = pts_reproj_left[_];

cv::circle(img_left, pt_repro, 2, cv::Scalar(0, 0, 255), -1);

}

cv::imwrite(img_path_left + "_corner.png", img_left);

计算12相机得到的棋盘格坐标的重投影结果,和56相机拍摄的棋盘格坐标误差

//存储相机位置的3d坐标和2d坐标

cv::Mat right_rvec, right_tvec;

for(int _ = 0; _ < pts_cames_valid.size(); _++) {

if (pts_cames_valid[_]) {

pts_chessboard_clouds.push_back(obj_cames[_]);

pts_right.push_back(pts_cames[_]);

}

}

//pnp得到56相机姿态right_revc, right_tvec

cv::solvePnP(pts_chessboard_clouds, pts_right, campara_56.camparam_.Ks[camid_right - 4], campara_56.camparam_.Ds[camid_right - 4], right_rvec, right_tvec, false, CV_EPNP);

cv::solvePnP(pts_chessboard_clouds, pts_right, campara_56.camparam_.Ks[camid_right - 4], campara_56.camparam_.Ds[camid_right - 4], right_rvec, right_tvec, true, CV_ITERATIVE);

//计算重投影误差

std::vector<cv::Point2f> pts_reproj_right;

cv::projectPoints(pts_chessboard_clouds,

right_rvec, right_tvec,

campara_56.camparam_.Ks[camid_right - 4],

campara_56.camparam_.Ds[camid_right - 4],

pts_reproj_right);

//计算12相机投影到56相机拍摄的棋盘格的坐标和本身计算的棋盘格坐标误差

double temp_error = 0;

for(int _ = 0; _ < pts_reproj_right.size(); _++) {

cv::Point2f pt_repro = pts_reproj_right[_];

cv::Point2f pt_corner = pts_right[_];

temp_error += ((pt_repro.x - pt_corner.x) * (pt_repro.x - pt_corner.x)) + ((pt_repro.y - pt_corner.y) * (pt_repro.y - pt_corner.y));

cv::circle(img_right, pt_repro, 2, cv::Scalar(0, 0, 255), -1);

cv::circle(img_right, pt_corner, 1, cv::Scalar(255, 0, 0), -1);

}

saveChessboard(pts_chessboard_clouds, img_path_left + "_chessboard.txt", cv::Size(n["board_width"],n["board_height"]));

cv::imwrite(img_path_right + "_reproj.png", img_right);

if(reproj_error > temp_error) {

reproj_error = temp_error;

rvec_min = right_rvec;

tvec_min = right_tvec;

min_reproj_error_path = img_path_right;

best_chessboard = pts_chessboard_clouds;

}

3.anchor点标定

通过将56相机拍摄的anchor图像坐标信息和对应相机参数来优化anchor点的相机坐标

**StereoLoss**对应于立体匹配的损失函数,用于优化左右两个相机的外参。**PoseErrorLoss**用于优化相机的位姿。**ShareLoss**将这些损失函数组合在一起,同时进行优化。

1. 最小化立体误差:

1. 缩放调整:

1. 最小化姿态误差:

4. ldmks图像坐标及对应3d坐标

ldmks通过人脸关键点检测得到, 3d坐标通过双目相机2d点位三角测量得到

/// \brief 计算3d点位

/// \param pts1 左侧图像上的2d点位

/// \param pts1 右侧图像上的2d点位

/// \param camid1 左侧相机的编号

/// \param camid2 右侧相机的编号

/// \param objpts 计算好的3d点位

/// \return 返回运行状态

GStatus MulitiCamerasParams::trianglate(std::vector<cv::Point2f> pts1,

std::vector<cv::Point2f> pts2,

int camid1,

int camid2,

std::vector<cv::Point3f>& objpts){

std::vector<cv::Point2f> undist_pts1, undist_pts2;

cv::undistortPoints(pts1, undist_pts1, camparam_.Ks[camid1], camparam_.Ds[camid1]);

cv::undistortPoints(pts2, undist_pts2, camparam_.Ks[camid2], camparam_.Ds[camid2]);

cv::Mat P1, P2;

P1 = camparam_.Ts[camid1].rowRange(0, 3);

P2 = camparam_.Ts[camid2].rowRange(0, 3);

cv::Mat pts_homo;

cv::triangulatePoints(P1, P2, undist_pts1, undist_pts2, pts_homo);

cv::convertPointsFromHomogeneous(pts_homo.t(), objpts);

return SUCCESS;

}